AI Academic Paper Roundup (爱可可 AI Paper Recommendations)

LG - Machine Learning CV - Computer Vision CL - Computation and Language

1. [CV]*GRF: Learning a General Radiance Field for 3D Scene Representation and Rendering

A Trevithick, B Yang

[Williams College & University of Oxford]

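GRF is an implicit neural function that represents and renders arbitrarily complex 3D scenes in a single network from 2D observations alone. It applies the principle of multi-view geometry to obtain internal representations from the observed 2D views, mapping 2D pixel features precisely into 3D space so that the learned implicit representations are meaningful and consistent across views. An attention mechanism handles visual occlusion implicitly. With GRF, realistic novel 2D views can be synthesized.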

We present a simple yet powerful implicit neural function that can represent and render arbitrarily complex 3D scenes in a single network only from 2D observations. The function models 3D scenes as a general radiance field, which takes a set of posed 2D images as input, constructs an internal representation for each 3D point of the scene, and renders the corresponding appearance and geometry of any 3D point viewing from an arbitrary angle. The key to our approach is to explicitly integrate the principle of multi-view geometry to obtain the internal representations from observed 2D views, guaranteeing the learned implicit representations meaningful and multi-view consistent. In addition, we introduce an effective neural module to learn general features for each pixel in 2D images, allowing the constructed internal 3D representations to be remarkably general as well. Extensive experiments demonstrate the superiority of our approach.

https://weibo.com/1402400261/JoWaVvpPs
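
The multi-view geometry step described above can be illustrated with a minimal sketch. It assumes a simple pinhole camera and treats the attention scores as given inputs; the function names, camera parameters, and toy values are hypothetical and are not taken from the GRF paper or its code.

```python
import math


def project_point(point, rotation, translation, focal, cx, cy):
    """Project a world-space 3D point into one posed pinhole camera.

    rotation is a 3x3 world-to-camera matrix (list of rows) and translation
    is a 3-vector. Returns the pixel (u, v), or None if the point lies
    behind the camera.
    """
    cam = [sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
           for r in range(3)]
    if cam[2] <= 0:
        return None
    u = focal * cam[0] / cam[2] + cx
    v = focal * cam[1] / cam[2] + cy
    return u, v


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_features(features, scores):
    """Attention-style pooling: softmax-weighted average of per-view features.

    In a learned model the scores would come from a network; here they are
    plain inputs (an assumption of this sketch).
    """
    weights = softmax(scores)
    dim = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# A point 2 units in front of a camera at the origin, offset 0.5 along x.
pixel = project_point([0.5, 0.0, 2.0], IDENTITY, [0.0, 0.0, 0.0],
                      focal=100.0, cx=64.0, cy=64.0)

# Two views contribute 2-D features; equal scores reduce to a plain average.
pooled = aggregate_features([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
```

Each 3D point is projected into every input view to look up a pixel feature, and the per-view features are then pooled into one representation for that point. A view in which the point is occluded would ideally receive a low attention score and contribute little to the pooled feature.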

2. [LG]*No MCMC for me: Amortized sampling for fast and stable training of energy-based models

W Grathwohl, J Kelly, M Hashemi, M Norouzi, K Swersky, D Duvenaud

[Google Research & University of Toronto]

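VERA is a simple method for training energy-based models (EBMs) at scale. An entropy-regularized generator amortizes the MCMC sampling used in EBM training, and a fast variational approximation improves on earlier MCMC-based entropy regularization methods. Applying the estimator to the Joint Energy Model (JEM) gives much faster training with unchanged performance.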

Energy-Based Models (EBMs) present a flexible and appealing way to represent uncertainty. Despite recent advances, training EBMs on high-dimensional data remains a challenging problem as the state-of-the-art approaches are costly, unstable, and require considerable tuning and domain expertise to apply successfully. In this work we present a simple method for training EBMs at scale which uses an entropy-regularized generator to amortize the MCMC sampling typically used in EBM training. We improve upon prior MCMC-based entropy regularization methods with a fast variational approximation. We demonstrate the effectiveness of our approach by using it to train tractable likelihood models. Next, we apply our estimator to the recently proposed Joint Energy Model (JEM), where we match the original performance with faster and stable training. This allows us to extend JEM models to semi-supervised classification on tabular data from a variety of continuous domains.

https://weibo.com/1402400261/JoWkvdtVw
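
Why a generator can stand in for MCMC can be written out in standard EBM notation. The symbols $f_\theta$, $q_\phi$, and $Z(\theta)$ are introduced here for illustration and do not appear in the summary above. For a model $p_\theta(x) = \exp(f_\theta(x)) / Z(\theta)$, the log-likelihood gradient is

$$
\nabla_\theta \log p_\theta(x) = \nabla_\theta f_\theta(x) - \mathbb{E}_{x' \sim p_\theta}\left[\nabla_\theta f_\theta(x')\right]
$$

The expectation over $p_\theta$ is the term that MCMC sampling usually estimates. A generator $q_\phi$ can replace those samples if it is trained to stay close to $p_\theta$, for example by minimizing

$$
\mathrm{KL}(q_\phi \,\|\, p_\theta) = -\mathbb{E}_{x \sim q_\phi}\left[f_\theta(x)\right] - H(q_\phi) + \log Z(\theta)
$$

Since $\log Z(\theta)$ does not depend on $\phi$, the generator maximizes $\mathbb{E}_{q_\phi}[f_\theta(x)] + H(q_\phi)$, and the entropy term $H(q_\phi)$ is the regularizer. The abstract says only that the entropy term is handled with a fast variational approximation, so its exact form is not reconstructed here.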

3. [LG]*Metrics and methods for a systematic comparison of fairness-aware machine learning algorithms

G P. Jones, J M. Hickey, P G. D Stefano, C Dhanjal, L C. Stoddart, V Vasileiou

[Experian DataLabs UK&I and EMEA]

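A systematic comparison of fairness-aware machine learning algorithms that are broadly applicable to supervised classification. It evaluates 28 different models, including 7 fairness-unaware (baseline) machine learning algorithms and 20 fairness-aware models driven by 8 fairness methods. Fairness, predictive performance, calibration quality, and speed are assessed using 3 decision-threshold policies, 7 datasets, 2 fairness metrics, and 3 predictive-performance metrics.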

Understanding and removing bias from the decisions made by machine learning models is essential to avoid discrimination against unprivileged groups. Despite recent progress in algorithmic fairness, there is still no clear answer as to which bias-mitigation approaches are most effective. Evaluation strategies are typically use-case specific, rely on data with unclear bias, and employ a fixed policy to convert model outputs to decision outcomes. To address these problems, we performed a systematic comparison of a number of popular fairness algorithms applicable to supervised classification. Our study is the most comprehensive of its kind. It utilizes three real and four synthetic datasets, and two different ways of converting model outputs to decisions. It considers fairness, predictive-performance, calibration quality, and speed of 28 different modelling pipelines, corresponding to both fairness-unaware and fairness-aware algorithms. We found that fairness-unaware algorithms typically fail to produce adequately fair models and that the simplest algorithms are not necessarily the fairest ones. We also found that fairness-aware algorithms can induce fairness without material drops in predictive power. Finally, we found that dataset idiosyncrasies (e.g., degree of intrinsic unfairness, nature of correlations) do affect the performance of fairness-aware approaches. Our results allow the practitioner to narrow down the approach(es) they would like to adopt without having to know in advance their fairness requirements.

https://weibo.com/1402400261/JoWrbwlC0
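
The step of converting model outputs to decisions and then scoring fairness can be sketched as follows. The summary above does not name the two fairness metrics used in the study, so this example assumes two common ones, demographic parity difference and equal opportunity difference. The scores, labels, and group assignments are toy values made up for illustration.

```python
def decide(scores, threshold=0.5):
    """Convert model scores to binary decisions with a fixed threshold."""
    return [1 if s >= threshold else 0 for s in scores]


def rate(values):
    """Mean of a list of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_difference(decisions, groups):
    """Positive-decision rate of group 1 minus that of group 0."""
    g1 = [d for d, g in zip(decisions, groups) if g == 1]
    g0 = [d for d, g in zip(decisions, groups) if g == 0]
    return rate(g1) - rate(g0)


def equal_opportunity_difference(decisions, labels, groups):
    """True-positive rate of group 1 minus that of group 0."""
    g1 = [d for d, y, g in zip(decisions, labels, groups) if g == 1 and y == 1]
    g0 = [d for d, y, g in zip(decisions, labels, groups) if g == 0 and y == 1]
    return rate(g1) - rate(g0)


# Toy data (illustrative only): model scores, true labels, group membership.
scores = [0.9, 0.8, 0.4, 0.3, 0.7, 0.2, 0.6, 0.1]
labels = [1, 1, 1, 0, 1, 1, 0, 0]
groups = [1, 1, 1, 1, 0, 0, 0, 0]

decisions = decide(scores)
dp_gap = demographic_parity_difference(decisions, groups)
eo_gap = equal_opportunity_difference(decisions, labels, groups)
```

On this toy data both groups receive positive decisions at the same rate, so the demographic parity gap is zero, while the true-positive rates differ. This is one reason a comparison may report more than one fairness metric and more than one thresholding policy.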

4. [CL]Automatic Extraction of Rules Governing Morphological Agreement

A Chaudhary, A Anastasopoulos, A Pratapa, D R. Mortensen, Z Sheikh, Y Tsvetkov, G Neubig

[CMU & George Mason University]

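Automatically extracts morphological agreement rules from raw text in a given language, and releases the extracted rules for 55 languages. The method first runs syntactic analysis on the raw text, predicting part-of-speech tags, dependency parses, and morphological features, then learns an agreement prediction model (a decision tree) on the analyzed data.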

Creating a descriptive grammar of a language is an indispensable step for language documentation and preservation. However, at the same time it is a tedious, time-consuming task. In this paper, we take steps towards automating this process by devising an automated framework for extracting a first-pass grammatical specification from raw text in a concise, human- and machine-readable format. We focus on extracting rules describing agreement, a morphosyntactic phenomenon at the core of the grammars of many of the world's languages. We apply our framework to all languages included in the Universal Dependencies project, with promising results. Using cross-lingual transfer, even with no expert annotations in the language of interest, our framework extracts a grammatical specification which is nearly equivalent to those created with large amounts of gold-standard annotated data. We confirm this finding with human expert evaluations of the rules that our framework produces, which have an average accuracy of 78%. We release an interface demonstrating the extracted rules at this https URL.

https://weibo.com/1402400261/JoWwLeUX9
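
The idea of reading agreement rules off parsed text can be illustrated with a much simpler stand-in for the decision tree mentioned above. This sketch only counts how often head and dependent share a feature value in each syntactic context and keeps the contexts above a threshold. The data layout, the helper names, and the `min_rate` threshold are assumptions made for illustration; the labels (`amod`, `NOUN`, `Gender`) follow Universal Dependencies conventions.

```python
from collections import defaultdict


def edge(relation, head_pos, dep_pos, head_gender, dep_gender):
    """Build one toy dependency edge with a Gender feature on both tokens."""
    return {
        "relation": relation,
        "head_pos": head_pos,
        "dep_pos": dep_pos,
        "head_feats": {"Gender": head_gender},
        "dep_feats": {"Gender": dep_gender},
    }


def agreement_rates(edges, feature):
    """Map (relation, head_pos, dep_pos) to the fraction of edges whose head
    and dependent share the same value for `feature`.

    Edges where either token lacks the feature are skipped.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [agreeing, total]
    for e in edges:
        head_val = e["head_feats"].get(feature)
        dep_val = e["dep_feats"].get(feature)
        if head_val is None or dep_val is None:
            continue
        key = (e["relation"], e["head_pos"], e["dep_pos"])
        counts[key][1] += 1
        if head_val == dep_val:
            counts[key][0] += 1
    return {key: agree / total for key, (agree, total) in counts.items()}


def extract_rules(edges, feature, min_rate=0.9):
    """Keep only the contexts whose agreement rate reaches `min_rate`."""
    rates = agreement_rates(edges, feature)
    return sorted(key for key, r in rates.items() if r >= min_rate)


# Toy edges (illustrative only): adjectives agree with nouns, objects do not
# reliably agree with their verbs.
edges = [
    edge("amod", "NOUN", "ADJ", "Fem", "Fem"),
    edge("amod", "NOUN", "ADJ", "Masc", "Masc"),
    edge("obj", "VERB", "NOUN", "Masc", "Fem"),
    edge("obj", "VERB", "NOUN", "Fem", "Fem"),
]

rates = agreement_rates(edges, "Gender")
rules = extract_rules(edges, "Gender")
```

A decision tree, as in the summary above, can split on several context features at once. The counting version here shows only the signal such a model learns from: agreement that holds far more often than chance in a particular syntactic context.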

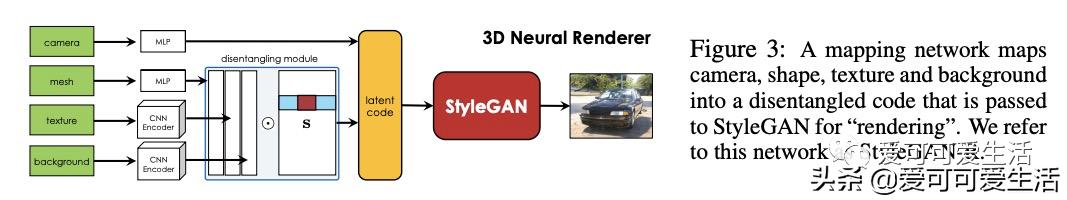

5. [CV]Image GANs meet Differentiable Rendering for Inverse Graphics and Interpretable 3D Neural Rendering

(Under review as a conference paper at ICLR 2021)

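Combines generative models with differentiable rendering to extract and disentangle the 3D knowledge learned by generative image synthesis models. A GAN serves as a multi-view data generator, and a differentiable renderer is used to train an inverse graphics network. The trained inverse graphics network then acts as a teacher to disentangle the GAN's latent code into interpretable 3D properties, and the whole architecture is trained iteratively with cycle consistency losses. The approach yields markedly higher-quality 3D reconstructions while requiring 10,000 times less annotation effort than standard datasets.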

Differentiable rendering has paved the way to training neural networks to perform “inverse graphics” tasks such as predicting 3D geometry from monocular photographs. To train high performing models, most of the current approaches rely on multi-view imagery which are not readily available in practice. Recent Generative Adversarial Networks (GANs) that synthesize images, in contrast, seem to acquire 3D knowledge implicitly during training: object viewpoints can be manipulated by simply manipulating the latent codes. However, these latent codes often lack further physical interpretation and thus GANs cannot easily be inverted to perform explicit 3D reasoning. In this paper, we aim to extract and disentangle 3D knowledge learned by generative models by utilizing differentiable renderers. Key to our approach is to exploit GANs as a multi-view data generator to train an inverse graphics network using an off-the-shelf differentiable renderer, and the trained inverse graphics network as a teacher to disentangle the GAN's latent code into interpretable 3D properties. The entire architecture is trained iteratively using cycle consistency losses. We show that our approach significantly outperforms state-of-the-art inverse graphics networks trained on existing datasets, both quantitatively and via user studies. We further showcase the disentangled GAN as a controllable 3D “neural renderer”, complementing traditional graphics renderers.

https://weibo.com/1402400261/JoWJk7MeC?ref=home
