How to build a k8s cluster (quickly setting up a k8s cluster environment)

k8s-node1    192.168.56.100

k8s-node2    192.168.56.101

k8s-node3    192.168.56.102

1.2 Create the Vagrantfile

Create a folder on your computer, F:\javatool\virtual\guli, and in it create a file named Vagrantfile with the following content:

Vagrant.configure("2") do |config|
  (1..3).each do |i|
    config.vm.define "k8s-node#{i}" do |node|
      # Base box for the VM
      node.vm.box = "centos/7"

      # Hostname of the VM
      node.vm.hostname = "k8s-node#{i}"

      # Private (host-only) IP of the VM: node i gets 192.168.56.(99+i)
      node.vm.network "private_network", ip: "192.168.56.#{99 + i}", netmask: "255.255.255.0"

      # Shared folder between the host and the VM
      # node.vm.synced_folder "~/Documents/vagrant/share", "/home/vagrant/share"

      # VirtualBox-specific settings
      node.vm.provider "virtualbox" do |v|
        # Name of the VM in VirtualBox
        v.name = "k8s-node#{i}"

        # Memory size in MB
        v.memory = 4096

        # Number of CPUs
        v.cpus = 4
      end
    end
  end
end
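In the Vagrantfile, node i gets the private address 192.168.56.(99+i), so i = 1, 2, 3 map to .100, .101 and .102, matching the table at the top. A minimal bash sketch of the same mapping can be handy when scripting against the nodes; the helper names are hypothetical and only for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helpers mirroring the Vagrantfile loop (1..3):
# node i is named k8s-node<i> and gets 192.168.56.<99+i> on the host-only network.

node_name() {
  printf 'k8s-node%d\n' "$1"
}

node_ip() {
  # Same arithmetic as "192.168.56.#{99 + i}" in the Vagrantfile
  printf '192.168.56.%d\n' $((99 + $1))
}

# Print the hostname/IP table for nodes 1..3
print_node_table() {
  local i
  for i in 1 2 3; do
    printf '%s %s\n' "$(node_name "$i")" "$(node_ip "$i")"
  done
}
```

Running print_node_table prints one "hostname IP" line per node, in the same order as the list above.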

In a Windows CMD window, run vagrant up in the directory that contains the Vagrantfile. It takes a while to finish. Execution log:

F:\javatool\virtual\guli>vagrant up

Bringing machine 'k8s-node1' up with 'virtualbox' provider...

Bringing machine 'k8s-node2' up with 'virtualbox' provider...

Bringing machine 'k8s-node3' up with 'virtualbox' provider...

==> k8s-node1: Importing base box 'centos/7'...

==> k8s-node1: Matching MAC address for NAT networking...

==> k8s-node1: Checking if box 'centos/7' version '2004.01' is up to date...

==> k8s-node1: Setting the name of the VM: k8s-node1

==> k8s-node1: Clearing any previously set network interfaces...

==> k8s-node1: Preparing network interfaces based on configuration...

k8s-node1: Adapter 1: nat

k8s-node1: Adapter 2: hostonly

==> k8s-node1: Forwarding ports...

k8s-node1: 22 (guest) => 2222 (host) (adapter 1)

==> k8s-node1: Running 'pre-boot' VM customizations...

==> k8s-node1: Booting VM...

==> k8s-node1: Waiting for machine to boot. This may take a few minutes...

k8s-node1: SSH address: 127.0.0.1:2222

k8s-node1: SSH username: vagrant

k8s-node1: SSH auth method: private key

k8s-node1:

k8s-node1: Vagrant insecure key detected. Vagrant will automatically replace

k8s-node1: this with a newly generated keypair for better security.

k8s-node1:

k8s-node1: Inserting generated public key within guest...

k8s-node1: Removing insecure key from the guest if it's present...

k8s-node1: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-node1: Machine booted and ready!

==> k8s-node1: Checking for guest additions in VM...

k8s-node1: No guest additions were detected on the base box for this VM! Guest

k8s-node1: additions are required for forwarded ports, shared folders, host only

k8s-node1: networking, and more. If SSH fails on this machine, please install

k8s-node1: the guest additions and repackage the box to continue.

k8s-node1:

k8s-node1: This is not an error message; everything may continue to work properly,

k8s-node1: in which case you may ignore this message.

==> k8s-node1: Setting hostname...

==> k8s-node1: Configuring and enabling network interfaces...

==> k8s-node1: Rsyncing folder: /cygdrive/f/javatool/virtual/guli/ => /vagrant

==> k8s-node2: Importing base box 'centos/7'...

==> k8s-node2: Matching MAC address for NAT networking...

==> k8s-node2: Checking if box 'centos/7' version '2004.01' is up to date...

==> k8s-node2: Setting the name of the VM: k8s-node2

==> k8s-node2: Fixed port collision for 22 => 2222. Now on port 2200.

==> k8s-node2: Clearing any previously set network interfaces...

==> k8s-node2: Preparing network interfaces based on configuration...

k8s-node2: Adapter 1: nat

k8s-node2: Adapter 2: hostonly

==> k8s-node2: Forwarding ports...

k8s-node2: 22 (guest) => 2200 (host) (adapter 1)

==> k8s-node2: Running 'pre-boot' VM customizations...

==> k8s-node2: Booting VM...

==> k8s-node2: Waiting for machine to boot. This may take a few minutes...

k8s-node2: SSH address: 127.0.0.1:2200

k8s-node2: SSH username: vagrant

k8s-node2: SSH auth method: private key

k8s-node2:

k8s-node2: Vagrant insecure key detected. Vagrant will automatically replace

k8s-node2: this with a newly generated keypair for better security.

k8s-node2:

k8s-node2: Inserting generated public key within guest...

k8s-node2: Removing insecure key from the guest if it's present...

k8s-node2: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-node2: Machine booted and ready!

==> k8s-node2: Checking for guest additions in VM...

k8s-node2: No guest additions were detected on the base box for this VM! Guest

k8s-node2: additions are required for forwarded ports, shared folders, host only

k8s-node2: networking, and more. If SSH fails on this machine, please install

k8s-node2: the guest additions and repackage the box to continue.

k8s-node2:

k8s-node2: This is not an error message; everything may continue to work properly,

k8s-node2: in which case you may ignore this message.

==> k8s-node2: Setting hostname...

==> k8s-node2: Configuring and enabling network interfaces...

==> k8s-node2: Rsyncing folder: /cygdrive/f/javatool/virtual/guli/ => /vagrant

==> k8s-node3: Importing base box 'centos/7'...

==> k8s-node3: Matching MAC address for NAT networking...

==> k8s-node3: Checking if box 'centos/7' version '2004.01' is up to date...

==> k8s-node3: Setting the name of the VM: k8s-node3

==> k8s-node3: Fixed port collision for 22 => 2222. Now on port 2201.

==> k8s-node3: Clearing any previously set network interfaces...

==> k8s-node3: Preparing network interfaces based on configuration...

k8s-node3: Adapter 1: nat

k8s-node3: Adapter 2: hostonly

==> k8s-node3: Forwarding ports...

k8s-node3: 22 (guest) => 2201 (host) (adapter 1)

==> k8s-node3: Running 'pre-boot' VM customizations...

==> k8s-node3: Booting VM...

==> k8s-node3: Waiting for machine to boot. This may take a few minutes...

k8s-node3: SSH address: 127.0.0.1:2201

k8s-node3: SSH username: vagrant

k8s-node3: SSH auth method: private key

k8s-node3:

k8s-node3: Vagrant insecure key detected. Vagrant will automatically replace

k8s-node3: this with a newly generated keypair for better security.

k8s-node3:

k8s-node3: Inserting generated public key within guest...

k8s-node3: Removing insecure key from the guest if it's present...

k8s-node3: Key inserted! Disconnecting and reconnecting using new SSH key...

==> k8s-node3: Machine booted and ready!

==> k8s-node3: Checking for guest additions in VM...

k8s-node3: No guest additions were detected on the base box for this VM! Guest

k8s-node3: additions are required for forwarded ports, shared folders, host only

k8s-node3: networking, and more. If SSH fails on this machine, please install

k8s-node3: the guest additions and repackage the box to continue.

k8s-node3:

k8s-node3: This is not an error message; everything may continue to work properly,

k8s-node3: in which case you may ignore this message.

==> k8s-node3: Setting hostname...

==> k8s-node3: Configuring and enabling network interfaces...

==> k8s-node3: Rsyncing folder: /cygdrive/f/javatool/virtual/guli/ => /vagrant

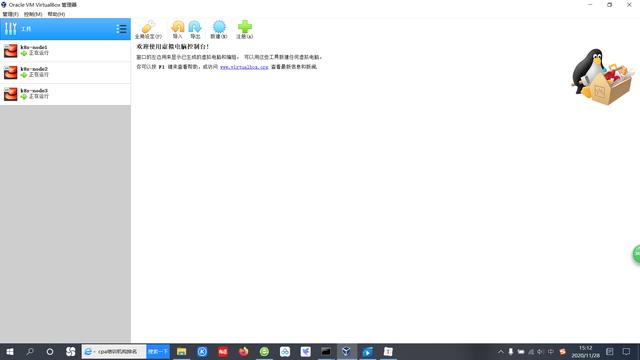

When it finishes, open VirtualBox: there are three running virtual machines, named k8s-node1, k8s-node2 and k8s-node3.

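Run vagrant ssh k8s-node1, then switch to the root user with su root. When prompted for a password, enter the initial password vagrant.

Edit the SSH daemon configuration with vi /etc/ssh/sshd_config.

Change PasswordAuthentication no to PasswordAuthentication yes, then save and quit.

Restart sshd: service sshd restart.

Run exit once to leave the root shell.

Run exit a second time to leave the VM.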

F:\javatool\virtual\guli>vagrant ssh k8s-node1

[vagrant@k8s-node1 ~]$ su root

Password:

[root@k8s-node1 vagrant]# vi /etc/ssh/sshd_config

[root@k8s-node1 vagrant]# service sshd restart

Redirecting to /bin/systemctl restart sshd.service

[root@k8s-node1 vagrant]# exit;

exit

[vagrant@k8s-node1 ~]$ exit;

logout

Connection to 127.0.0.1 closed.

Repeat the same commands on k8s-node2 and k8s-node3.
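Editing sshd_config by hand on three machines is repetitive. A minimal sketch that performs the same edit non-interactively, assuming the stock "PasswordAuthentication no" line of the centos/7 box; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch "PasswordAuthentication no" to "yes" in the
# given sshd config file. Commented-out lines (starting with #) are left alone.
enable_password_auth() {
  local file=${1:-/etc/ssh/sshd_config} tmp
  tmp=$(mktemp) || return 1
  if sed -E 's/^PasswordAuthentication[[:space:]]+no[[:space:]]*$/PasswordAuthentication yes/' "$file" > "$tmp"; then
    cat "$tmp" > "$file"   # overwrite in place, keeping the file's owner and mode
  fi
  rm -f "$tmp"
}
```

On each node, as root, this would be followed by service sshd restart, exactly as in the manual steps.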

Connect to the three VMs with Xshell. The VMs are now ready.

2 Prerequisite environment setup for the k8s cluster

2.1 Check the IP routes of the three machines

Run ip route show:

[root@k8s-node1 ~]# ip route show

default via 10.0.2.2 dev eth0 proto dhcp metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

192.168.56.0/24 dev eth1 proto kernel scope link src 192.168.56.100 metric 101

[root@k8s-node2 ~]# ip route show

default via 10.0.2.2 dev eth0 proto dhcp metric 101

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 101

192.168.56.0/24 dev eth1 proto kernel scope link src 192.168.56.101 metric 100

[root@k8s-node3 ~]# ip route show

default via 10.0.2.2 dev eth0 proto dhcp metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

192.168.56.0/24 dev eth1 proto kernel scope link src 192.168.56.102 metric 101

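All three VMs use eth0 with the same IP address, 10.0.2.15. This is because their first adapter uses VirtualBox's default Network Address Translation (NAT) mode, in which every VM sits behind its own private NAT and receives the same guest address, so the VMs cannot reach each other over eth0.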

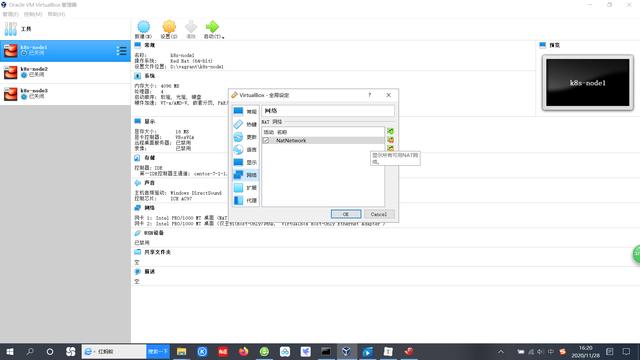

We need to change this network mode. Open VirtualBox and choose File (管理) -> Preferences (全局设定) -> Network, then add a new NAT Network.

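Next, in each VM's network settings, change adapter 1 (currently NAT) so that "Attached to" is NAT Network, select the network just created as its Name, and refresh the MAC address.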

Run ip route show again:

[root@k8s-node1 ~]# ip route show

default via 10.0.2.1 dev eth0 proto dhcp metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

192.168.56.0/24 dev eth1 proto kernel scope link src 192.168.56.100 metric 101

[root@k8s-node2 ~]# ip route show

default via 10.0.2.1 dev eth0 proto dhcp metric 101

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.4 metric 101

192.168.56.0/24 dev eth1 proto kernel scope link src 192.168.56.101 metric 100

[root@k8s-node3 ~]# ip route show

default via 10.0.2.1 dev eth0 proto dhcp metric 101

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.5 metric 101

192.168.56.0/24 dev eth1 proto kernel scope link src 192.168.56.102 metric 100

The three VMs now have different eth0 addresses: 10.0.2.15, 10.0.2.4 and 10.0.2.5.
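To compare the nodes without reading the route tables by eye, here is a small sketch that extracts the src address of the directly connected route on a given interface from ip route show output. The helper is hypothetical and only parses text; the test uses lines copied from k8s-node2 above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "ip route show" output on stdin and print the
# "src" address of every non-default route on the given device (default eth0).
route_src_ip() {
  local dev=${1:-eth0}
  awk -v dev="$dev" '
    $1 != "default" && $2 == "dev" && $3 == dev {
      for (i = 4; i < NF; i++) if ($i == "src") print $(i + 1)
    }'
}
```

On a node, ip route show | route_src_ip eth0 should print that node's NAT Network address; the three results should all differ.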

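Ping the three machines' IP addresses from one another to check that the internal network works, and ping www.baidu.com from each of them to check internet access.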
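These ping checks can be looped. A minimal sketch follows. The helper is hypothetical, and it assumes the iputils ping shipped with CentOS 7, where -c sets the packet count and -W the reply timeout in seconds. It reports each target and returns the number of failures:

```shell
#!/usr/bin/env bash
# Hypothetical helper: ping each target once and report OK/FAIL.
# The return status is the number of unreachable targets (0 = all reachable).
check_reachable() {
  local host failed=0
  for host in "$@"; do
    if ping -c 1 -W 2 "$host" >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=$((failed + 1))
    fi
  done
  return "$failed"
}
```

On each node, check_reachable 10.0.2.15 10.0.2.4 10.0.2.5 www.baidu.com covers both the internal and the external check in one go.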

2.2 Configure the Linux environment (on all three nodes)

# (1) Stop and disable the firewall

systemctl stop firewalld && systemctl disable firewalld

# (2) Disable SELinux enforcement (switch to permissive mode)

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# (3) Turn off swap (immediately, and permanently by commenting it out in /etc/fstab)

swapoff -a

sed -i '/swap/s/^\(.*\)$/#\1/g' /etc/fstab

# (4) Flush the iptables rules and set the FORWARD policy to ACCEPT

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

# (5) Set kernel parameters so that bridged traffic passes through iptables

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
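The sed in step (3) comments out every /etc/fstab line that merely contains the word swap, and adds another # to already-commented lines each time it is rerun. Below is a slightly stricter alternative sketch, which is a hypothetical helper and not part of the original steps. It only comments out active entries whose filesystem-type field is swap, so it is safe to run repeatedly:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active fstab entries whose 3rd field
# (filesystem type) is "swap". Lines already starting with # are untouched,
# so running it twice changes nothing.
comment_out_swap() {
  local fstab=${1:-/etc/fstab} tmp
  tmp=$(mktemp) || return 1
  if awk '!/^[[:space:]]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab" > "$tmp"; then
    cat "$tmp" > "$fstab"   # write back in place, keeping owner and mode
  fi
  rm -f "$tmp"
}
```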

Add the hostname-to-IP mappings:

vi /etc/hosts

10.0.2.15 k8s-node1

10.0.2.4 k8s-node2

10.0.2.5 k8s-node3

If a machine's hostname needs to be changed, use:

hostnamectl set-hostname <newhostname>
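Editing /etc/hosts with vi works once, but rerunning the setup piles up duplicate lines. A minimal idempotent sketch follows. The helper is hypothetical. It adds an IP/hostname pair only if that exact mapping is not already present:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless a non-comment
# line already maps that IP to that name.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if awk -v ip="$ip" -v name="$name" '
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit (found ? 0 : 1) }' "$file"; then
    return 0   # mapping already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

As root, add_host_entry /etc/hosts 10.0.2.15 k8s-node1 (and likewise for k8s-node2 and k8s-node3) reproduces the entries above. The 10.0.2.x addresses are handed out by the NAT Network's DHCP server, so check your own with ip route show first.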

2.3 Install Docker

01 Remove any previously installed Docker

sudo yum remove docker \
                docker-client \
                docker-client-latest \
                docker-common \
                docker-latest \
                docker-latest-logrotate \
                docker-logrotate \
                docker-engine

02 Install the required dependencies

sudo yum install -y yum-utils \
  device-mapper-persistent-data \
  lvm2

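03 Set up the Docker repository [to use the Aliyun mirror repository instead, search online for instructions for now; a later lesson also covers running our own Docker Hub]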

sudo yum-config-manager \
  --add-repo \
  https://download.docker.com/linux/centos/docker-ce.repo

[Visit this address and log in with your own Aliyun account. At the bottom left of the menu you will find the image accelerator (镜像加速器): https://cr.console.aliyun.com/cn-hangzhou/instances/mirrors]

04 Install Docker

sudo yum install -y docker-ce docker-ce-cli containerd.io

05 Start Docker

sudo systemctl start docker

06 Test whether Docker was installed successfully

sudo docker run hello-world

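07 Enable Docker at boot and configure the registry mirror (image accelerator); replace the mirror address below with the one shown in your own Aliyun console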

sudo systemctl enable docker

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://22hf0lkd.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
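Section 2.5.3 later adds "exec-opts": ["native.cgroupdriver=systemd"] to this same daemon.json. Hand-editing JSON is error-prone, because a missing comma stops Docker from starting. Writing both keys in one go avoids that. Below is a sketch with a hypothetical helper. The mirror URL is a per-account Aliyun accelerator address, so pass your own:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json that sets both the registry mirror
# and the systemd cgroup driver (the driver the kubelet should match).
write_daemon_json() {
  local file=$1 mirror=$2
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["$mirror"]
}
EOF
}
```

As root, call write_daemon_json /etc/docker/daemon.json with your accelerator URL, then run systemctl daemon-reload && systemctl restart docker. Afterwards, docker info --format '{{.CgroupDriver}}' should print systemd.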

Add the Kubernetes yum repository (Aliyun mirror):

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

List the available kubelet versions:

yum list kubelet --showduplicates | sort -r
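The install step pins version 1.17.3, so it is worth confirming that the repository actually offers it. The sketch below scans yum list output. The helper is hypothetical, and it assumes the usual three-column layout: name.arch, version-release, repo.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "yum list kubelet --showduplicates" output on stdin
# and succeed if the given upstream version (e.g. 1.17.3) is listed.
has_kubelet_version() {
  local want=$1
  awk -v want="$want" '
    $1 ~ /^kubelet\./ && ($2 == want || index($2, want "-") == 1) { found = 1 }
    END { exit (found ? 0 : 1) }'
}
```

For example: yum list kubelet --showduplicates | has_kubelet_version 1.17.3 && echo available.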

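Install kubeadm, kubelet and kubectl, pinned to version 1.17.3:

yum install -y kubeadm-1.17.3 kubelet-1.17.3 kubectl-1.17.3

2.5.3 Make Docker and k8s use the same cgroup driver

# Docker: add the following entry to /etc/docker/daemon.json (keep the commas right so the file stays valid JSON), then restart Docker
vi /etc/docker/daemon.json
"exec-opts": ["native.cgroupdriver=systemd"],
systemctl restart docker

# kubelet: if this command reports that the directory does not exist, that is fine; just carry on.
# Note: this sed switches the kubelet to cgroupfs, the opposite of the systemd driver set for Docker above; if the file exists on your system, make sure both end up using the same driver.
sed -i "s/cgroup-driver=systemd/cgroup-driver=cgroupfs/g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
systemctl enable kubelet && systemctl start kubelet

2.5.4 Initialize the master node

Official documentation: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/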

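Note: run the following on the master node (k8s-node1, whose eth0 address is 10.0.2.15) only. If the required images are not present locally, run master_images.sh first.

kubeadm init --kubernetes-version=1.17.3 --apiserver-advertise-address=10.0.2.15 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/16

[To reset the cluster state and initialize again, run kubeadm reset and then repeat the command above.]

Contents of master_images.sh (pulls the images from the Aliyun mirror and retags them as k8s.gcr.io):

#!/bin/bash
images=(
    kube-apiserver:v1.17.3
    kube-proxy:v1.17.3
    kube-controller-manager:v1.17.3
    kube-scheduler:v1.17.3
    coredns:1.6.5
    etcd:3.4.3-0
    pause:3.1
)
for imageName in "${images[@]}" ; do
    docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
    docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
done

Log of a successful master initialization: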

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.2.15:6443 --token njegi6.oj7rc4x6agiu1go2 \
    --discovery-token-ca-cert-hash sha256:c282026afc2e329f4f80f6793966a906a75940bee4daeb36261ea383f69b4154

Run the commands as instructed:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install the network plugin (flannel) by running kubectl apply -f kube-flannel.yml
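kubeadm init was run with --pod-network-cidr=10.244.0.0/16, and the Network value in flannel's net-conf.json (inside the kube-flannel-cfg ConfigMap of kube-flannel.yml) has to match it. The sketch below extracts that value from the manifest so the two can be compared before applying. The helpers are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Network" value from flannel's net-conf.json
# as embedded in a kube-flannel.yml manifest.
flannel_network() {
  sed -n -E 's/.*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$1" | head -n 1
}

# Succeed only if the manifest's Network equals the expected pod CIDR.
check_pod_cidr() {
  local manifest=$1 expected=$2 actual
  actual=$(flannel_network "$manifest")
  if [ "$actual" = "$expected" ]; then
    echo "OK: flannel Network $actual"
  else
    echo "MISMATCH: flannel Network '$actual', kubeadm pod CIDR '$expected'" >&2
    return 1
  fi
}
```

For example: check_pod_cidr kube-flannel.yml 10.244.0.0/16.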

The contents of kube-flannel.yml are as follows:

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

After applying it, check whether the installation succeeded; this takes about 3 minutes:

[root@k8s-node1 k8s]# kubectl get pods --all-namespaces
NAMESPACE     NAME                                READY   STATUS    RESTARTS   AGE
kube-system   coredns-6955765f44-2qrmj            1/1     Running   0          14m
kube-system   coredns-6955765f44-s4t2r            1/1     Running   0          14m
kube-system   etcd-k8s-node1                      1/1     Running   0          14m
kube-system   kube-apiserver-k8s-node1            1/1     Running   0          14m
kube-system   kube-controller-manager-k8s-node1   1/1     Running   0          14m
kube-system   kube-flannel-ds-amd64-nlwhz         1/1     Running   0          2m4s
kube-system   kube-proxy-ml92v                    1/1     Running   0          14m
kube-system   kube-scheduler-k8s-node1            1/1     Running   0          14m

When every pod is in the Running state, the installation has succeeded.
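Instead of rerunning the command and scanning the STATUS column by eye, you can use the sketch below. It reads kubectl get pods --all-namespaces output and succeeds only when every pod is Running with all of its containers ready. The helper is hypothetical, and it assumes the default column layout shown above: NAMESPACE, NAME, READY, STATUS, and so on.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get pods --all-namespaces" output on stdin.
# Succeeds only if every pod's STATUS is Running and READY is n/n.
# Pods that are not ready are printed as "namespace/name status ready".
all_pods_running() {
  awk '
    NR == 1 { next }                     # skip the header line
    {
      split($3, r, "/")
      if ($4 != "Running" || r[1] != r[2]) { print $1 "/" $2, $4, $3; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }'
}
```

A simple wait loop would be: until kubectl get pods --all-namespaces | all_pods_running; do sleep 10; done.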

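2.5.5 Join the worker nodes to the master

Run the join command on k8s-node2 and k8s-node3, using the token and hash printed by your own kubeadm init: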

kubeadm join 10.0.2.15:6443 --token njegi6.oj7rc4x6agiu1go2 \
    --discovery-token-ca-cert-hash sha256:c282026afc2e329f4f80f6793966a906a75940bee4daeb36261ea383f69b4154

This takes a while. On the master, you can follow the progress with kubectl get pod -n kube-system -o wide:

[root@k8s-node1 k8s]# kubectl get pod -n kube-system -o wide
NAME                                READY   STATUS              RESTARTS   AGE    IP           NODE        NOMINATED NODE   READINESS GATES
coredns-6955765f44-2qrmj            1/1     Running             0          27m    10.244.0.2   k8s-node1   <none>           <none>
coredns-6955765f44-s4t2r            1/1     Running             0          27m    10.244.0.3   k8s-node1   <none>           <none>
etcd-k8s-node1                      1/1     Running             0          27m    10.0.2.15    k8s-node1   <none>           <none>
kube-apiserver-k8s-node1            1/1     Running             0          27m    10.0.2.15    k8s-node1   <none>           <none>
kube-controller-manager-k8s-node1   1/1     Running             0          27m    10.0.2.15    k8s-node1   <none>           <none>
kube-flannel-ds-amd64-dhnt8         0/1     Init:0/1            0          108s   10.0.2.5     k8s-node3   <none>           <none>
kube-flannel-ds-amd64-nlwhz         1/1     Running             0          15m    10.0.2.15    k8s-node1   <none>           <none>
kube-flannel-ds-amd64-zqhzv         0/1     Init:0/1            0          115s   10.0.2.4     k8s-node2   <none>           <none>
kube-proxy-cvj7d                    0/1     ContainerCreating   0          108s   10.0.2.5     k8s-node3   <none>           <none>
kube-proxy-m28l8                    0/1     ContainerCreating   0          115s   10.0.2.4     k8s-node2   <none>           <none>
kube-proxy-ml92v                    1/1     Running             0          27m    10.0.2.15    k8s-node1   <none>           <none>
kube-scheduler-k8s-node1            1/1     Running             0          27m    10.0.2.15    k8s-node1   <none>           <none>

Once the joins have completed, all three nodes report Ready:

[root@k8s-node1 k8s]# kubectl get nodes
NAME        STATUS   ROLES    AGE     VERSION
k8s-node1   Ready    master   12m     v1.17.3
k8s-node2   Ready    <none>   9m44s   v1.17.3
k8s-node3   Ready    <none>   6m35s   v1.17.3
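The same kind of check works for nodes. The sketch below reads kubectl get nodes output and succeeds only when every node's STATUS is exactly Ready. The helper is hypothetical, and it treats any other status (for example NotReady) as a failure:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get nodes" output on stdin; succeed only if
# every node's STATUS column is exactly "Ready". Other nodes are printed.
all_nodes_ready() {
  awk '
    NR == 1 { next }                 # header: NAME STATUS ROLES AGE VERSION
    $2 != "Ready" { print $1, $2; bad = 1 }
    END { exit (bad ? 1 : 0) }'
}
```

For example: until kubectl get nodes | all_nodes_ready; do sleep 10; done.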
